Muser

Smart music visualizer

Muser is an experiment in using machine learning to enhance music visualization. The idea originated while writing a seminar paper on the history of music visualization. I researched, designed and built the project as part of my studies.

A pre-trained neural network called musicnn predicts the musical genre for each second of a song. The predictions are then used to generate a color scheme: the final visualization palette is based on the five best-fitting genres.
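The step from genre predictions to a palette can be sketched roughly as follows. This is a minimal illustration, not Muser's actual implementation: it assumes each second of audio comes with one score per genre tag (the kind of output a tagger such as musicnn produces). The `GENRE_COLORS` table and the function names `top_genres` and `palette_for_second` are hypothetical, chosen only for this example.

```python
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical genre colors for illustration only.
# Muser's real colors follow the Musicmap project.
GENRE_COLORS: Dict[str, RGB] = {
    "rap": (220, 40, 40),
    "pop": (240, 210, 50),
    "acoustic": (60, 110, 220),
    "rock": (150, 60, 160),
    "electronic": (40, 200, 180),
    "jazz": (240, 140, 40),
}


def top_genres(
    scores: Sequence[float], tags: Sequence[str], k: int = 5
) -> List[Tuple[str, float]]:
    """Return the k highest-scoring (tag, score) pairs for one second."""
    ranked = sorted(zip(tags, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def palette_for_second(
    scores: Sequence[float], tags: Sequence[str], k: int = 5
) -> List[RGB]:
    """Map the k best-fitting genres of one second to their colors.

    Tags without an entry in GENRE_COLORS are skipped.
    """
    return [
        GENRE_COLORS[tag]
        for tag, _ in top_genres(scores, tags, k)
        if tag in GENRE_COLORS
    ]


if __name__ == "__main__":
    tags = ["rap", "pop", "acoustic", "rock", "electronic", "jazz"]
    scores = [0.1, 0.2, 0.9, 0.05, 0.3, 0.0]  # made-up scores for one second
    print(palette_for_second(scores, tags))
```

Ordering the palette by score keeps the best-fitting genre's color first, so a renderer could give it the most visual weight.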

We can use this to visualize how the style of a song changes over time:

Love the Way You Lie / Eminem feat. Rihanna

For example, we can see that this classic song by Eminem and Rihanna starts with an acoustic intro (bluish), then alternates between rap (red) and pop (yellow) segments.
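One way to read such a timeline programmatically is to collapse the per-second predictions into runs of the same dominant genre. The sketch below assumes a list of per-second score rows and a matching list of tag names. The function name `dominant_segments` and the sample data are hypothetical and do not come from the Muser code base.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def dominant_segments(
    taggram: Sequence[Sequence[float]], tags: Sequence[str]
) -> List[Tuple[str, int, int]]:
    """Collapse per-second scores into (genre, start, end) runs.

    `start` is inclusive and `end` is exclusive, both in seconds.
    """
    # Pick the best-scoring tag for each second.
    dominant = [
        tags[max(range(len(row)), key=row.__getitem__)] for row in taggram
    ]

    segments: List[Tuple[str, int, int]] = []
    second = 0
    # groupby merges consecutive seconds that share the same dominant genre.
    for genre, run in groupby(dominant):
        length = len(list(run))
        segments.append((genre, second, second + length))
        second += length
    return segments


if __name__ == "__main__":
    tags = ["acoustic", "rap", "pop"]
    taggram = [  # made-up scores: one row per second
        [0.8, 0.1, 0.1],
        [0.7, 0.2, 0.1],
        [0.1, 0.8, 0.1],
        [0.1, 0.2, 0.7],
        [0.2, 0.1, 0.7],
    ]
    for genre, start, end in dominant_segments(taggram, tags):
        print(f"{start:>3}s to {end:>3}s: {genre}")
```

Real predictions are noisier than this toy input, so a practical version would probably smooth the scores over a few seconds before segmenting.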

For more information, visit the project website.

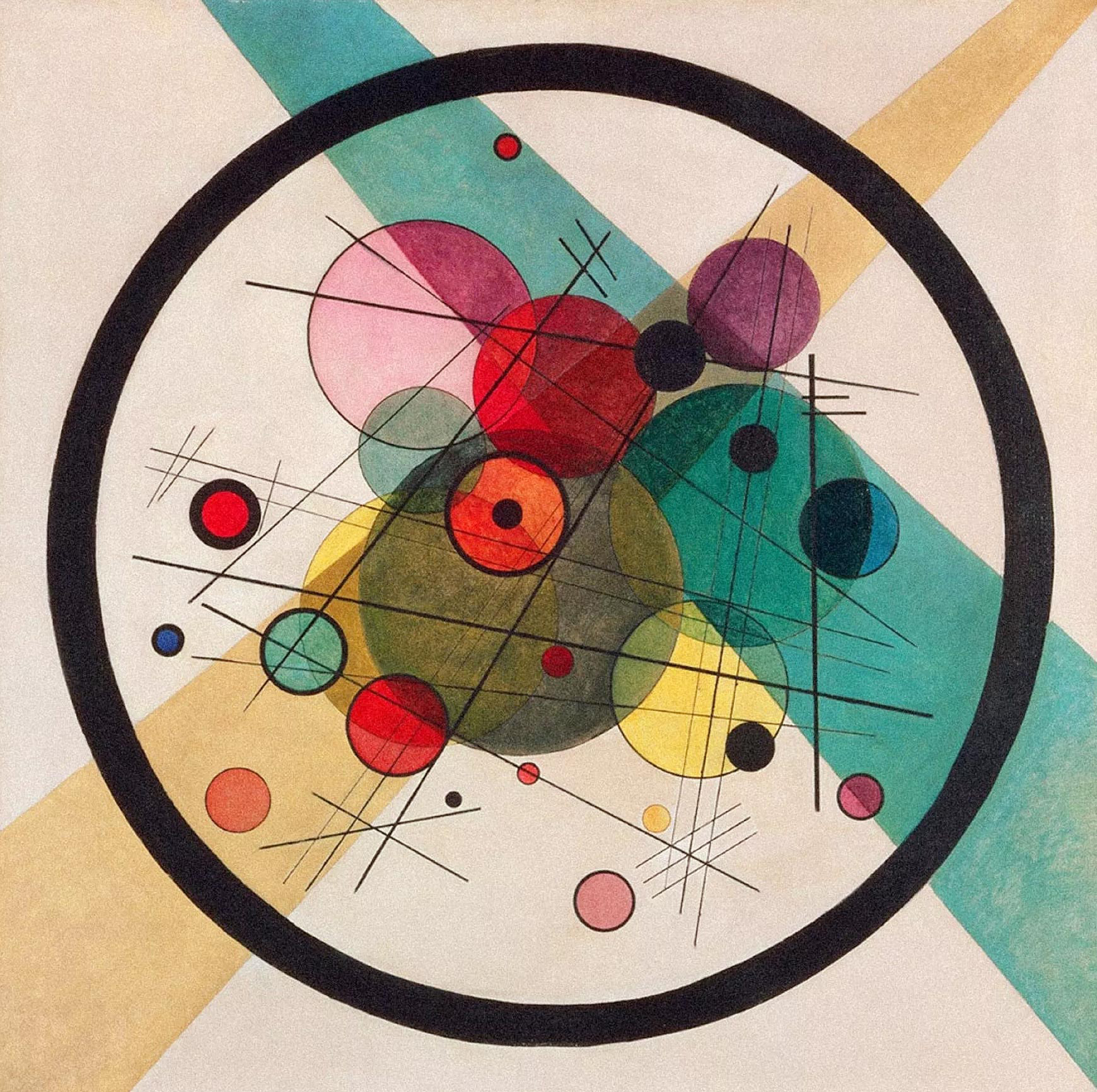

Circles in a Circle by Wassily Kandinsky, 1923

Colors for each genre were chosen according to the Musicmap project, so that stylistically closer genres get similar colors.

Muser is inspired by Wassily Kandinsky (1866-1944). Generally credited as the pioneer of abstract art, he is well known for the musical influences in his work. Kandinsky associated specific tones and instruments with shapes and colors, thus “visualizing” a musical composition.
